Binary Convolutional neural network by XNOR.AI

A great idea to save memory and computation by using a different number representation. Convolutional neural networks are expensive in terms of memory, specialized HW, and computational power. This simple trick can bring these networks to less powerful devices.

Unique solution?

I am thinking about this. Let me know in the comments how unique this solution is. In the automotive industry, embedded HW solutions close to this one already exist. For convolution layers it is unique, I guess.

GPUs have different types of registers so that they can calculate in FLOAT16 faster than in the FLOAT32 representation. The idea here goes further and basically represents real 32-bit numbers as binary numbers, which brings a 32x memory saving. Said another way, they introduce an approximation of Y = WX.

This could be the input vector X multiplied by the weight matrix W of each layer. W and X are real numbers, Float32 or Float16. The approximation looks like Y ≈ αβWX

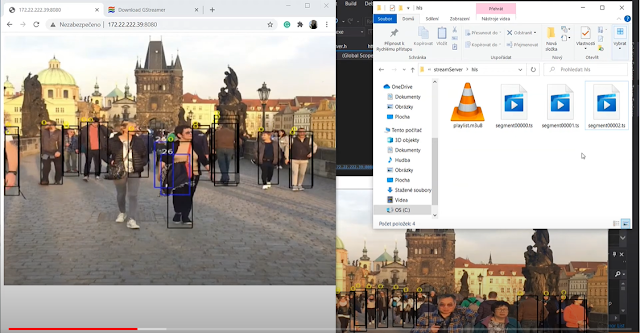

Alpha is a scalar, beta is a matrix, and W and X are now just binary. The precision is lower, but it may be enough for some applications. Instead of approximating an already learned network (BWN), they bring this into the learning of the network. Computing convolution layers during the forward pass is also much more efficient, so low-power CPU devices could handle the problem in real time.
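To make the idea concrete, here is a minimal sketch in C of how a dot product of two binary vectors can be computed with XNOR and a bit count instead of multiplications. It assumes that every element is +1 or -1 and is packed as one bit (1 for +1, 0 for -1). The names popcount32, binary_dot, and approx_dot are hypothetical, and alpha and beta are treated as plain scalars for a single output value, which is a simplification. This is not the XNOR.AI implementation, only an illustration of the principle.

```c
#include <stdint.h>

/* Count the set bits of a 32-bit word (portable loop; GCC and Clang
   also provide __builtin_popcount for this). */
int popcount32(uint32_t v) {
    int count = 0;
    while (v) {
        v &= v - 1;   /* clear the lowest set bit */
        count++;
    }
    return count;
}

/* Dot product of two {-1,+1} vectors of length n_bits, packed one
   element per bit (bit 1 = +1, bit 0 = -1). XNOR marks the positions
   where both elements agree; each agreement contributes +1 and each
   disagreement -1, so the result is 2 * matches - n_bits. */
int binary_dot(const uint32_t *w, const uint32_t *x, int n_bits) {
    int matches = 0;
    int full_words = n_bits / 32;
    int rest = n_bits % 32;
    for (int i = 0; i < full_words; i++) {
        matches += popcount32(~(w[i] ^ x[i]));
    }
    if (rest) {
        /* Only the lowest `rest` bits of the last word are valid. */
        uint32_t mask = (1u << rest) - 1u;
        matches += popcount32(~(w[full_words] ^ x[full_words]) & mask);
    }
    return 2 * matches - n_bits;
}

/* Scaled approximation of one output value. alpha and beta are assumed
   to be scalars here (hypothetical simplification). */
float approx_dot(const uint32_t *w, const uint32_t *x, int n_bits,
                 float alpha, float beta) {
    return alpha * beta * (float)binary_dot(w, x, n_bits);
}
```

With 32 elements per word, one XOR, one NOT, and one bit count stand in for 32 multiply-adds, which is presumably where the speed-up on plain CPUs comes from.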

Binary representation: binary field in C

I am going deeper into this technology. Let me introduce some operations with binary arrays in C. This is an exciting area, although not so special. Maybe you program only in higher-level languages like Java or Python, so how to set only one particular bit in C could be interesting for you. It is for me. There is no direct support for addressing a single bit of an array.

Take an array of two integers, A[2]. When an int is 32 bits wide, this array can store 64 bits. Representing an image in only black and white is quite limiting for representing good features. If you use such a representation for each color and each convolution filter, the representation is not so boring.

One convolution layer can then be stored in 1/32 of the memory.
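A small sketch of where the factor of 32 comes from, assuming the weights start as 32-bit floats and only their sign is kept (bit 1 for a weight >= 0, bit 0 for a negative weight). The function names words_for_bits and pack_signs are hypothetical and only serve this illustration.

```c
#include <stddef.h>
#include <stdint.h>

/* Number of 32-bit words needed to hold n bits (rounded up). */
size_t words_for_bits(size_t n) {
    return (n + 31) / 32;
}

/* Keep only the sign of each float weight: bit i is 1 when
   weights[i] >= 0 and 0 otherwise. `packed` must have room for
   words_for_bits(n) words. */
void pack_signs(const float *weights, size_t n, uint32_t *packed) {
    size_t words = words_for_bits(n);
    for (size_t w = 0; w < words; w++) {
        packed[w] = 0;
    }
    for (size_t i = 0; i < n; i++) {
        if (weights[i] >= 0.0f) {
            packed[i / 32] |= (uint32_t)1 << (i % 32);
        }
    }
}
```

As a hypothetical example, a layer with 3 x 3 x 256 x 256 = 589,824 weights takes 2,359,296 bytes as Float32, but only 18,432 words, or 73,728 bytes, when packed this way.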

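    unsigned int A[2] = {0, 0};

Set the bit at index 1 of A (bit indices start at 0, so this is the second bit)

    setBit(A, 1);

Set the bit at index 5 of A

    setBit(A, 5);

The two helpers use unsigned integers, so shifting into the top bit (n % 32 == 31) stays well defined.

    /* Set bit n of a bit array stored in 32-bit words. */
    void setBit(unsigned int *Field, int n) {
        Field[n / 32] |= 1u << (n % 32);
    }

    /* Clear bit n of a bit array stored in 32-bit words. */
    void clearBit(unsigned int *Field, int n) {
        Field[n / 32] &= ~(1u << (n % 32));
    }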

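The 1 in the expression is just the bit pattern 00000001, and the << bit shift moves it to the right place. The magic is in the two index expressions: n / 32 selects the array element that holds the bit, and n % 32 is the position of the bit inside that element.

For example, for n = 3 the pattern 00000001 is shifted to 00001000. OR-ing this mask into the element sets the bit, and AND-ing with the inverted mask (~) clears it.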

By the same principle it is possible to write a function that prints the array, and almost anything else you need.
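For example, reading and printing could look roughly like this. It is only a sketch: testBit and printBits are hypothetical names, and the array is assumed to be made of unsigned 32-bit integers.

```c
#include <stdio.h>

/* Return 1 if bit n of the packed array is set, otherwise 0. */
int testBit(const unsigned int *Field, int n) {
    return (int)((Field[n / 32] >> (n % 32)) & 1u);
}

/* Print the first n_bits bits, starting with bit 0, then a newline. */
void printBits(const unsigned int *Field, int n_bits) {
    for (int n = 0; n < n_bits; n++) {
        putchar(testBit(Field, n) ? '1' : '0');
    }
    putchar('\n');
}
```

Note that printBits writes bit 0 first, so the output reads left to right in index order, which is the reverse of how a binary number is usually written.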

Resources

Look at the company founded around this technology: https://xnor.ai

Check also GitHub: https://github.com/allenai/XNOR-Net

There is some integration into Torch, and I think also into YOLO and Darknet, but this needs some extensions to the already existing layers.