OpenCV HLS video stream to web

This tutorial shows all the components, configuration, and code needed to stream processed video from an OpenCV C++ program to a web player. The C++ program takes any video input and processes it. The processed video is streamed out of OpenCV through a GStreamer pipeline (the Windows part). HLS output consists of one playlist file and several MPEG-TS video segments, which are stored in the Windows file system. I use WSL 2 (Windows Subsystem for Linux) to run an Ubuntu distribution, where NGINX is installed with the RTMP module. NGINX serves the video stream from the Windows file system to the web. Let's look at this in more detail.

What is covered?

- OpenCV C++ part + GStreamer pipeline

- NGINX configuration

- Architecture

- Web Player for your HLS stream

What is not covered?

- Detailed installation of OpenCV + GStreamer (more in the GStreamer installation and GStreamer installation 2 tutorials for Windows)

- Detailed installation of NGINX + RTMP module

- Installation of WSL 2 and Ubuntu

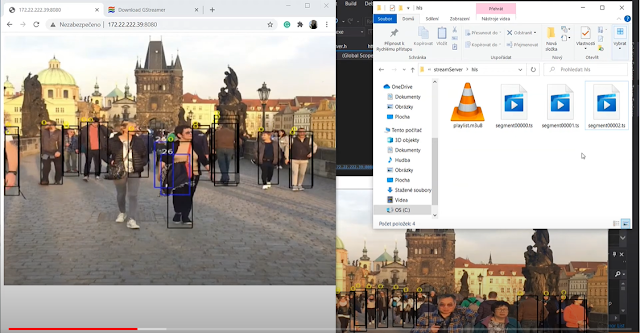

The architecture of the streaming system

The C++ program reads video from a file or a video stream, for example with VideoCapture cap("/samp.MOV");

The video is processed in the C++ app. In my case, a very simple YOLO Darknet detector is implemented, and its results are drawn into the video frame. The processed frame is then pushed into the GStreamer pipeline used by the OpenCV VideoWriter writer. This HLS pipeline stores HLS fragments and playlists in the Windows file system. The NGINX server in Ubuntu on WSL 2 distributes this content from the Windows file system to the web. This works because the Windows drive C:/ is reachable from WSL under /mnt/c/.
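Because NGINX in WSL 2 sees the Windows drive under /mnt/c/, the same folder has two names: C:/streamServer/hls on the Windows side (used by the GStreamer pipeline) and /mnt/c/streamServer/hls on the Ubuntu side (used by NGINX). A minimal sketch of that translation, assuming the default WSL automount root /mnt/; windowsToWslPath is a hypothetical helper, not part of any library:

```cpp
#include <cctype>
#include <cstddef>
#include <string>

// Hypothetical helper: translate a Windows path such as "C:/streamServer/hls"
// into the path WSL 2 exposes it under, e.g. "/mnt/c/streamServer/hls".
// Assumes the default automount root /mnt/ (configurable in /etc/wsl.conf).
std::string windowsToWslPath(const std::string& winPath) {
    // Only handle "<drive letter>:..." paths; anything else is returned unchanged.
    if (winPath.size() < 2 ||
        !std::isalpha(static_cast<unsigned char>(winPath[0])) ||
        winPath[1] != ':') {
        return winPath;
    }
    std::string result = "/mnt/";
    // WSL mounts drives under lowercase letters: C: -> /mnt/c
    result += static_cast<char>(std::tolower(static_cast<unsigned char>(winPath[0])));
    for (std::size_t i = 2; i < winPath.size(); ++i) {
        // Normalize Windows backslashes to forward slashes.
        result += (winPath[i] == '\\') ? '/' : winPath[i];
    }
    return result;
}
```

For example, windowsToWslPath("C:/streamServer/hls") gives "/mnt/c/streamServer/hls", which is the folder NGINX is pointed at on the Ubuntu side.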

Installation and prerequisites for the OpenCV app

1) Compile OpenCV with GStreamer support (see the GStreamer OpenCV on Windows guides).

2) Enable WSL 2 virtualization in Windows and install Ubuntu 20.04.1. I tested only WSL 2; I am not sure whether file system sharing with Windows works the same way in WSL 1.

3) Install NGINX with the RTMP module in Ubuntu on WSL 2.

There are also standard prerequisites such as Visual Studio 2019, some knowledge of Linux, and the ability to find documentation on enabling WSL 2 and installing NGINX with the RTMP module. It is a lot.

HLS protocol brief introduction

HLS stands for HTTP Live Streaming. The protocol was introduced by Apple and has become quite popular over the years. Its major advantage is the ability to adapt the video stream to the available network throughput. Classic HLS puts video into MPEG-TS containers (newer protocol versions also allow fragmented MP4). Each .ts file holds the actual media content, a video segment of a defined length. The segments are organized in a playlist, which holds the references to the MPEG-TS segments.
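To make the playlist structure concrete, here is a minimal sketch that writes an HLS media playlist in the format described by RFC 8216: an #EXTM3U header, a target duration, a media sequence number, and one #EXTINF line plus a URI per segment. In this setup hlssink writes the file for you; the Segment struct and buildMediaPlaylist function are hypothetical and only illustrate the format.

```cpp
#include <algorithm>
#include <cmath>
#include <sstream>
#include <string>
#include <vector>

// One MPEG-TS segment referenced by the playlist (hypothetical type).
struct Segment {
    std::string uri;   // e.g. "segment00000.ts" or an absolute http:// URL
    double duration;   // segment length in seconds
};

// Sketch of an HLS media playlist (protocol version 3, which allows
// fractional #EXTINF durations).
std::string buildMediaPlaylist(const std::vector<Segment>& segments,
                               unsigned mediaSequence) {
    // Target duration: the longest segment, rounded up so that every
    // segment duration rounded to the nearest integer stays within it.
    double longest = 0.0;
    for (const auto& s : segments) longest = std::max(longest, s.duration);

    std::ostringstream out;
    out << "#EXTM3U\n";
    out << "#EXT-X-VERSION:3\n";
    out << "#EXT-X-TARGETDURATION:" << static_cast<int>(std::ceil(longest)) << "\n";
    out << "#EXT-X-MEDIA-SEQUENCE:" << mediaSequence << "\n";
    for (const auto& s : segments) {
        // Each segment: its duration tag, then the URI of the .ts file.
        out << "#EXTINF:" << s.duration << ",\n" << s.uri << "\n";
    }
    return out.str();
}
```

A live playlist like the one hlssink maintains simply keeps rewriting this file as new segments appear and old ones are dropped, increasing the media sequence number accordingly.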

Advantages of HTTP streaming

One of the significant advantages is the HTTP protocol itself. HTTP traffic passes easily through firewalls and proxy servers, which is the main advantage over UDP-based RTP and RTSP streaming. HTTP also brings standard support and secure transfer over HTTPS.

HLS MPEG-TS

The video is encoded in GStreamer with H.264 (together with an audio codec, if there is an audio track) and placed into a file container called MPEG-2 Transport Stream. The stream chunks have the length defined in the GStreamer pipeline.
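MPEG-TS has a simple fixed framing: the stream is a sequence of 188-byte packets, each starting with the sync byte 0x47, and the low 5 bits of byte 1 plus byte 2 form a 13-bit packet identifier (PID). A quick sanity check on a segment written by the pipeline could look like the following sketch; it is not a demuxer, and the function names are hypothetical.

```cpp
#include <cstddef>
#include <cstdint>
#include <vector>

// MPEG-2 Transport Stream framing constants.
constexpr std::size_t kTsPacketSize = 188;
constexpr std::uint8_t kTsSyncByte = 0x47;

// Returns true if `data` looks like whole TS packets: non-empty, a multiple
// of 188 bytes, and every packet begins with the sync byte.
bool looksLikeTransportStream(const std::vector<std::uint8_t>& data) {
    if (data.empty() || data.size() % kTsPacketSize != 0) return false;
    for (std::size_t off = 0; off < data.size(); off += kTsPacketSize) {
        if (data[off] != kTsSyncByte) return false;
    }
    return true;
}

// Extracts the 13-bit packet identifier from a packet header
// (PID 0 is the Program Association Table).
std::uint16_t packetPid(const std::uint8_t* packet) {
    return static_cast<std::uint16_t>(((packet[1] & 0x1F) << 8) | packet[2]);
}
```

Reading a .ts file from C:/streamServer/hls into a byte vector and calling looksLikeTransportStream on it is a cheap way to tell a truncated or half-written segment from a complete one.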

HLS playlist M3U8

The playlist simply refers to the concrete .ts files that hold the video content to play.
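In an M3U8 file, lines beginning with # are tags, and every other non-empty line is a URI. A sketch of extracting the segment references from a playlist (a hypothetical helper using only the standard library):

```cpp
#include <sstream>
#include <string>
#include <vector>

// Returns the media segment URIs listed in an M3U8 playlist.
// Lines starting with '#' are tags or comments; every other
// non-blank line is a URI, here a reference to one .ts segment.
std::vector<std::string> segmentUris(const std::string& playlist) {
    std::vector<std::string> uris;
    std::istringstream in(playlist);
    std::string line;
    while (std::getline(in, line)) {
        // Tolerate Windows line endings (CRLF).
        if (!line.empty() && line.back() == '\r') line.pop_back();
        if (line.empty() || line[0] == '#') continue;
        uris.push_back(line);
    }
    return uris;
}
```

When the pipeline sets a playlist root, the URIs in the playlist are expected to start with that root (for example http://172.22.222.39:8080/live/...), so printing them is a quick way to check that they point at the address NGINX actually serves.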

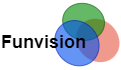

GStreamer OpenCV pipeline

GStreamer needs to be set up properly: all environment path variables must be correct, and OpenCV needs to be compiled with GStreamer. Note: to use x264enc, choose the full installation of GStreamer, not the recommended one. A standard OpenCV VideoWriter pipeline chains the following elements. appsrc means that your C++ OpenCV program is the source for GStreamer. The next elements handle video conversion and scaling. x264enc encodes the video output, and mpegtsmux packs it into MPEG-TS containers (.ts segments). hlssink then produces an HLS playlist and .ts segments from your video. Its location and playlist-location properties must point to a folder shared with Ubuntu. The result is several .ts segments and one playlist. The playlist-root value needs to be updated to your IP and port: 172.22.222.39:8080 is the IP and port in my setup, and yours depends on the NGINX and Ubuntu networking configuration. This value is stored in the playlist and points to the concrete .ts segments. Both the playlist and the segments then need to be served correctly by the NGINX server.

VideoWriter GStreamer pipeline example

Remember to update the locations, IP, and port in this example as described above.
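Since the pipeline is just a string, it can be assembled from the values you need to change. A sketch under these assumptions: the element chain follows the description above (appsrc, videoconvert, videoscale, x264enc, mpegtsmux, hlssink), the frame size is fixed with a video/x-raw caps filter, and the hlssink properties location, playlist-location, playlist-root, target-duration and max-files are set. The concrete values, the segment%05d.ts naming, and the HlsPipelineConfig struct are illustrative, not the exact pipeline from this project.

```cpp
#include <sstream>
#include <string>

// Hypothetical settings for the hlssink pipeline; all values are examples.
struct HlsPipelineConfig {
    int width = 1280;                                            // must match the frames you write
    int height = 720;
    std::string outputDir = "C:/streamServer/hls";               // seen by Ubuntu as /mnt/c/streamServer/hls
    std::string playlistRoot = "http://172.22.222.39:8080/live"; // IP:PORT served by NGINX
    int targetDuration = 5;                                      // requested segment length in seconds
    int maxFiles = 20;                                           // older segments are deleted
};

// appsrc (frames from the C++ program) -> conversion/scaling -> fixed raw
// size -> H.264 -> MPEG-TS muxer -> hlssink (playlist + .ts segments).
std::string buildHlsPipeline(const HlsPipelineConfig& c) {
    std::ostringstream p;
    p << "appsrc ! videoconvert ! videoscale"
      << " ! video/x-raw,width=" << c.width << ",height=" << c.height
      << " ! x264enc ! mpegtsmux"
      << " ! hlssink playlist-root=" << c.playlistRoot
      << " location=" << c.outputDir << "/segment%05d.ts"
      << " playlist-location=" << c.outputDir << "/playlist.m3u8"
      << " target-duration=" << c.targetDuration
      << " max-files=" << c.maxFiles;
    return p.str();
}
```

The resulting string would then be handed to OpenCV, for example cv::VideoWriter writer(buildHlsPipeline(cfg), cv::CAP_GSTREAMER, 0, fps, cv::Size(cfg.width, cfg.height), true); where fps is your frame rate. Keeping the size in one struct means the caps filter and the VideoWriter frame size cannot drift apart.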

How to deliver the playlist and content?

The content stored in the Windows location C:/streamServer/hls/ must be reachable by a simple web player. This requires the server to provide two things: 1) a web page with your simple video player, and 2) the HLS playlist and .ts segments, reachable under the same IP address. Both are handled entirely by the NGINX server running in Ubuntu on WSL 2. NGINX needs to be compiled with the RTMP module.
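Before looking at NGINX, it can help to confirm that the pipeline actually produced both parts of the stream. A sketch using C++17 std::filesystem, assuming the playlist is named playlist.m3u8 and segments use the .ts extension (the hlssink defaults); hlsOutputReady is a hypothetical helper:

```cpp
#include <filesystem>
#include <string>
#include <system_error>

namespace fs = std::filesystem;

// Returns true if `dir` contains the playlist file and at least one .ts
// segment. Errors (missing folder, no permission) are reported as "not ready".
bool hlsOutputReady(const fs::path& dir,
                    const std::string& playlistName = "playlist.m3u8") {
    std::error_code ec;
    if (!fs::is_regular_file(dir / playlistName, ec)) return false;
    for (const auto& entry : fs::directory_iterator(dir, ec)) {
        if (entry.is_regular_file(ec) && entry.path().extension() == ".ts") {
            return true;  // found at least one segment
        }
    }
    return false;
}
```

On the Windows side this would be called as hlsOutputReady("C:/streamServer/hls"); a program running in Ubuntu would use /mnt/c/streamServer/hls for the same folder.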

I am not an NGINX expert. In my configuration, HLS is switched on in the rtmp module, and hls_path points to the location of the content generated by OpenCV.

hls_path /mnt/c/streamServer/hls;

In the HTTP part, a server listens on port 8080 of the local host: index.html is available under the / location, and the HLS playlist is available under /live.
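The routing described above can be modeled in a few lines. This is not NGINX code; it is a hypothetical sketch of the mapping that a location for /live performs, assuming the folder from hls_path and the MIME types commonly configured for HLS (application/vnd.apple.mpegurl for .m3u8, video/mp2t for .ts).

```cpp
#include <optional>
#include <string>

// Result of resolving a request URL (hypothetical type).
struct HlsFile {
    std::string path;      // file on the Ubuntu side
    std::string mimeType;  // Content-Type the player expects
};

// Maps /live/<name> to <hlsPath>/<name>. Returns nothing for URLs outside
// /live/, path traversal attempts, or files that are not part of HLS.
std::optional<HlsFile> resolveHlsRequest(
        const std::string& url,
        const std::string& hlsPath = "/mnt/c/streamServer/hls") {
    const std::string prefix = "/live/";
    if (url.compare(0, prefix.size(), prefix) != 0) return std::nullopt;
    const std::string name = url.substr(prefix.size());
    if (name.empty() || name.find("..") != std::string::npos) return std::nullopt;

    auto endsWith = [&name](const std::string& ext) {
        return name.size() >= ext.size() &&
               name.compare(name.size() - ext.size(), ext.size(), ext) == 0;
    };
    if (endsWith(".m3u8")) return HlsFile{hlsPath + "/" + name, "application/vnd.apple.mpegurl"};
    if (endsWith(".ts")) return HlsFile{hlsPath + "/" + name, "video/mp2t"};
    return std::nullopt;
}
```

The point of the model is that the playlist URL in the player, the playlist-root in the pipeline, and this /live mapping all have to describe the same folder.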

NGINX config for HLS streaming

Web player

I used one of the existing examples based on video.js and videojs-contrib-hls/5.14.1/videojs-contrib-hls.js, which adds HLS support. All you need to change is the IP:PORT address in the src attribute of the video HTML element, src="http://172.22.222.39:8080/live/playlist.m3u8". This is exactly the location NGINX uses to serve the playlist.

C++ OpenCV GStreamer Windows part

The input video from VideoCapture is processed by the YOLO Darknet neural network; you can find more about it in the Yolo darknet detection tutorial. The VideoWriter writer uses the GStreamer pipeline discussed above, and once a frame is processed, the output is pushed into the pipeline by writing the frame to the writer.

Make sure that the size of the input image matches the size in your pipeline. Remember to change the IP and port as discussed in the sections about the pipeline and NGINX.
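One way to catch a size mismatch early is to read the width and height back out of the pipeline string and compare them with each frame before writing it. A sketch assuming the caps are written as width=...,height=... as in a video/x-raw caps filter; the helper names are hypothetical:

```cpp
#include <optional>
#include <regex>
#include <string>
#include <utility>

// Reads width and height from raw-video caps in a pipeline string such as
// "... ! video/x-raw,width=1280,height=720 ! ...". Returns nothing if absent.
std::optional<std::pair<int, int>> pipelineFrameSize(const std::string& pipeline) {
    static const std::regex caps(R"(width=(\d+),height=(\d+))");
    std::smatch m;
    if (!std::regex_search(pipeline, m, caps)) return std::nullopt;
    return std::make_pair(std::stoi(m[1].str()), std::stoi(m[2].str()));
}

// True when a frame of frameWidth x frameHeight matches the declared size.
bool frameMatchesPipeline(int frameWidth, int frameHeight,
                          const std::string& pipeline) {
    auto size = pipelineFrameSize(pipeline);
    return size && size->first == frameWidth && size->second == frameHeight;
}
```

In the OpenCV program this would be called as frameMatchesPipeline(frame.cols, frame.rows, pipeline) before writing; on a mismatch, resizing the frame (for example with cv::resize) is one option.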

YouTube tutorial about problems with OpenCV HLS streaming

This video tutorial shows the main problems encountered while developing such a streaming service.