OpenCV Web Camera and Video Streams in Windows Subsystem for Linux (WSL) with FFmpeg and GStreamer

Windows Subsystem for Linux (WSL) is a compatibility layer designed to run Linux binary executables (in ELF format) natively on Windows 10. I love it, but it has some limitations worth mentioning. The biggest is the lack of CUDA support, which can be a limitation for deep learning applications and model training in WSL. The second problem for OpenCV development is the lack of web camera support. Until now, this has made WSL almost useless for me.

The following code does not work in WSL for now:

VideoCapture cap;

cap.open(0);

FFmpeg to a WSL OpenCV program and back to a web browser in Windows

The goal of this OpenCV tutorial is shown in this video.

What you will learn about OpenCV and FFMPEG

- Stream video from Windows to Linux in the WSL environment

- Capture the video stream in a Linux OpenCV application

- Compile OpenCV in Linux with GStreamer and FFmpeg support

- Stream the video processing output back to Windows with GStreamer

- Present the OpenCV video stream in a web browser's HTML5 video player

Architecture behind the OpenCV VideoCapture web camera

Windows 10 runs Ubuntu Linux in WSL, which is installed easily from the Microsoft Store. FFmpeg is installed on Windows. On Linux, FFmpeg and GStreamer are installed, and OpenCV is built from source with support for both. Video is captured by FFmpeg on Windows, encoded with the H.264 codec, and sent over UDP to localhost at udp://127.0.0.1:5000. OpenCV receives the video with VideoCapture cap.open("udp://127.0.0.1:5000"). Because OpenCV is built with GStreamer, the processed video is sent back by a GStreamer VideoWriter stream("send video to 127.0.0.1:5010"). Finally, FFmpeg on Windows captures the video back from the source rtp://127.0.0.1:5010 into MyOutput.mp4. This is the whole pipeline. You can also watch the streams produced by OpenCV on the web; we will get there as well.
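The two ends of the Linux part of this pipeline can be written down as plain strings. The sketch below is not the author's code: the helper names captureUrl and rtpSenderPipeline are hypothetical, and the GStreamer elements in the sender (x264enc, rtph264pay, udpsink) are my assumption of what could fill the VideoWriter placeholder above. Only standard C++ is used; the strings are meant to be passed to cv::VideoCapture and to cv::VideoWriter with cv::CAP_GSTREAMER.

```cpp
#include <string>

// Hypothetical helper: URL that OpenCV's VideoCapture opens to receive
// the H.264 stream FFmpeg sends from Windows over UDP (port 5000 in the text).
std::string captureUrl(const std::string& host, int port) {
    return "udp://" + host + ":" + std::to_string(port);
}

// Hypothetical helper: GStreamer pipeline a VideoWriter (CAP_GSTREAMER) could use
// to send frames back as H.264 over RTP/UDP (port 5010 in the text).
// Assumption: x264enc + rtph264pay + udpsink; the original pipeline is not shown.
std::string rtpSenderPipeline(const std::string& host, int port) {
    return "appsrc ! videoconvert ! x264enc tune=zerolatency speed-preset=ultrafast"
           " ! rtph264pay config-interval=1 pt=96"
           " ! udpsink host=" + host + " port=" + std::to_string(port);
}
```

For example, captureUrl("127.0.0.1", 5000) gives udp://127.0.0.1:5000, the address FFmpeg sends to in this architecture. Keep in mind that with a dynamic RTP payload type such as 96, FFmpeg on the Windows side usually needs an SDP file describing the stream before it can decode it.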

OpenCV, FFmpeg, and GStreamer pipeline from WSL to the web

Install GStreamer with apt-get (Linux)

The following commands are valid for Ubuntu 18.04 running under the Windows Subsystem for Linux. I am not aware of any special package repository needed to install GStreamer, although I think a special repository may be needed for extra codec support, for example H.265. Search online for more information, or send me a message if you fail to install GStreamer. It is important.

sudo apt-get install gstreamer1.0*

sudo apt install ubuntu-restricted-extras

sudo apt install libgstreamer1.0-dev libgstreamer-plugins-base1.0-dev

Build and compile FFMPEG (Linux)

I build FFmpeg from source: install the needed packages with apt-get, download the source with wget, untar it, configure the build, and compile with make. These steps are standard, so I will not explain them in detail; this is not a basic tutorial.

sudo apt-get -y install git make nasm pkg-config libx264-dev libxext-dev libxfixes-dev zlib1g-dev

sudo wget -O ffmpeg-2.8.tar.bz2 "https://www.ffmpeg.org/releases/ffmpeg-2.8.tar.bz2"

sudo tar -xvf ffmpeg-2.8.tar.bz2

sudo rm -Rf ffmpeg-2.8.tar.bz2

cd /home/nomce/libs/ffmpeg-2.8

./configure --enable-nonfree --enable-gpl --enable-libx264 --enable-x11grab --enable-zlib

make -j2

sudo make install

sudo ldconfig -v

If you are not able to build and install the whole FFmpeg, make sure that libavcodec-dev, libavformat-dev, and libswscale-dev are installed. This should be enough to receive a video stream with cap.open("udp://127.0.0.1:5000");.

Install FFMPEG in Windows

We will capture the web camera with FFmpeg in Windows and send the stream to Linux, so FFmpeg must be installed on the Windows machine as well. Just download FFmpeg for Windows.

Compile OpenCV with FFMPEG and GStreamer (Linux)

This is pretty much how the OpenCV team describes the installation under Linux. The only difference is in the CMake configuration: -D WITH_GSTREAMER=ON -D WITH_FFMPEG=ON.

sudo apt-get install build-essential

sudo apt-get install cmake git libgtk2.0-dev pkg-config libavcodec-dev libavformat-dev libswscale-dev

sudo apt-get install python-dev python-numpy libtbb2 libtbb-dev libjpeg-dev libpng-dev libtiff-dev libjasper-dev libdc1394-22-dev

git clone https://github.com/opencv/opencv.git

cd ~/opencv

mkdir build

cd build

cmake -D CMAKE_BUILD_TYPE=RELEASE -D CMAKE_INSTALL_PREFIX=/usr/local -D WITH_GSTREAMER=ON -D WITH_FFMPEG=ON ..

The output of the CMake configuration should list both FFMPEG and GStreamer. Otherwise, you cannot continue and achieve the goal of this tutorial.

-- Video I/O:

-- DC1394: YES (2.2.5)

-- FFMPEG: YES

-- avcodec: YES (57.107.100)

-- avformat: YES (57.83.100)

-- avutil: YES (55.78.100)

-- swscale: YES (4.8.100)

-- avresample: YES (3.7.0)

-- GStreamer: YES (1.14.5)

-- v4l/v4l2: YES (linux/videodev2.h)
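Instead of reading this list by eye, you can check it in code. A minimal sketch, assuming the "Name: YES ..." line layout shown above; the function backendEnabled is hypothetical and does plain text matching only. The same layout appears in the text returned by cv::getBuildInformation(), so you could pass that string to it as well.

```cpp
#include <sstream>
#include <string>

// Hypothetical helper: returns true if a line such as "--   GStreamer: YES (1.14.5)"
// is present for the given backend name (e.g. "FFMPEG", "GStreamer").
bool backendEnabled(const std::string& buildInfo, const std::string& name) {
    std::istringstream in(buildInfo);
    std::string line;
    const std::string key = name + ":";
    while (std::getline(in, line)) {
        std::size_t pos = line.find(key);
        if (pos == std::string::npos) continue;
        // Skip the padding after the colon and check whether the value starts with YES.
        std::size_t value = line.find_first_not_of(' ', pos + key.size());
        if (value != std::string::npos && line.compare(value, 3, "YES") == 0) return true;
    }
    return false;
}
```

A program could then stop early with an error message when backendEnabled(cv::getBuildInformation(), "GStreamer") is false, before trying to open any stream.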

Once the CMake configuration output contains FFMPEG and GStreamer, compile and install OpenCV as follows. This can take some time, depending on your machine.

make -j8

sudo make install

Check your OpenCV library installation

Your first simple program should be the following code. It tells you whether your OpenCV libraries are OK.

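source.cpp

#include <iostream>
#include <opencv2/opencv.hpp>

int main(int argc, const char** argv)
{
    // Print how OpenCV was built, including the Video I/O backends
    std::cout << cv::getBuildInformation() << std::endl;
    return 0;
}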

Configure source.cpp for compilation with a CMakeLists.txt placed in the same folder as the source file.

cmake_minimum_required(VERSION 2.8)

project( ProjectName )

find_package( OpenCV REQUIRED )

add_executable( ProjectName source.cpp )

target_link_libraries( ProjectName ${OpenCV_LIBS} )

The result can look like the following. The important part is Video I/O, where FFMPEG and GStreamer should be listed with YES and a version.

General configuration for OpenCV 4.1.2-dev =====================================

Version control: 4.1.2-121-g5dd3e6052e

Platform:

Timestamp: 2019-11-09T13:55:51Z

Host: Linux 4.4.0-17134-Microsoft x86_64

CMake: 3.10.2

CMake generator: Unix Makefiles

CMake build tool: /usr/bin/make

Configuration: RELEASE

CPU/HW features:

Baseline: SSE SSE2 SSE3

requested: SSE3

Dispatched code generation: SSE4_1 SSE4_2 FP16 AVX AVX2 AVX512_SKX

requested: SSE4_1 SSE4_2 AVX FP16 AVX2 AVX512_SKX

SSE4_1 (16 files): + SSSE3 SSE4_1

SSE4_2 (2 files): + SSSE3 SSE4_1 POPCNT SSE4_2

FP16 (1 files): + SSSE3 SSE4_1 POPCNT SSE4_2 FP16 AVX

AVX (5 files): + SSSE3 SSE4_1 POPCNT SSE4_2 AVX

AVX2 (29 files): + SSSE3 SSE4_1 POPCNT SSE4_2 FP16 FMA3 AVX AVX2

AVX512_SKX (6 files): + SSSE3 SSE4_1 POPCNT SSE4_2 FP16 FMA3 AVX AVX2

C/C++:

Built as dynamic libs?: YES

C++ Compiler: /usr/bin/c++ (ver 7.3.0)

C++ flags (Release): -fsigned-char -W -Wall -Werror=return-type

C++ flags (Debug): -fsigned-char -W -Wall -Werror=return-type

C flags (Release): -fsigned-char -W -Wall -Werror=return-type -Werror=

Linker flags (Release): -Wl,--gc-sections

Linker flags (Debug): -Wl,--gc-sections

ccache: NO

Precompiled headers: NO

Extra dependencies: dl m pthread rt

3rdparty dependencies:

OpenCV modules:

To be built: calib3d core dnn features2d flann gapi highgui

Disabled: world

Disabled by dependency: -

Unavailable: java js python2 python3

Applications: tests perf_tests apps

Documentation: NO

Non-free algorithms: NO

GUI:

GTK+: YES (ver 2.24.32)

GThread : YES (ver 2.56.4)

GtkGlExt: NO

VTK support: NO

Media I/O:

ZLib: /usr/lib/x86_64-linux-gnu/libz.so (ver 1.2.11)

JPEG: /usr/lib/x86_64-linux-gnu/libjpeg.so (ver 80)

WEBP: build (ver encoder: 0x020e)

PNG: /usr/lib/x86_64-linux-gnu/libpng.so (ver 1.6.34)

TIFF: /usr/lib/x86_64-linux-gnu/libtiff.so (ver 42 / 4.0.9)

JPEG 2000: build (ver 1.900.1)

OpenEXR: /usr/lib/x86_64-linux-gnu/libImath.so /

HDR: YES

SUNRASTER: YES

PXM: YES

PFM: YES

Video I/O:

DC1394: YES (2.2.5)

FFMPEG: YES

avcodec: YES (57.107.100)

avformat: YES (57.83.100)

avutil: YES (55.78.100)

swscale: YES (4.8.100)

avresample: YES (3.7.0)

GStreamer: YES (1.14.5)

v4l/v4l2: YES (linux/videodev2.h)

Parallel framework: pthreads

Trace: YES (with Intel ITT)

Other third-party libraries:

Intel IPP: 2019.0.0 Gold [2019.0.0]

at: /home/vlada/opencv/opencv/build/3rdparty/ippicv

Intel IPP IW: sources (2019.0.0)

at: /home/vlada/opencv/opencv/build/3rdparty/ippicv

Lapack: NO

Eigen: NO

Custom HAL: NO

Protobuf: build (3.5.1)

OpenCL: YES (no extra features)

Include path: /home/vlada/opencv/opencv/3rdparty/include/opencl/1.2

Link libraries: Dynamic load

Python (for build): /usr/bin/python2.7

Java:

ant: NO

JNI: NO

Java wrappers: NO

Java tests: NO

Install to: /usr/local

-----------------------------------------------------------------

FFMPEG basics for this tutorial

FFmpeg is very powerful; this section covers only what we need for this tutorial. You will definitely use the following commands.

List available devices with FFMPEG

This command lists the microphones and cameras that FFmpeg can capture:

ffmpeg -list_devices true -f dshow -i dummy

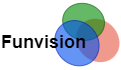
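The camera name printed by this command is what goes into -i video="...". If you want to pick it up programmatically, a minimal sketch follows. It assumes the output format of recent FFmpeg builds, where each device name is printed in double quotes and followed by an "Alternative name" line; the exact format can differ between FFmpeg versions, and parseDshowDevices is a hypothetical helper.

```cpp
#include <sstream>
#include <string>
#include <vector>

// Hypothetical helper: extracts quoted device names from the text printed by
// "ffmpeg -list_devices true -f dshow -i dummy".
// Assumption: one quoted name per device line; "Alternative name" lines are skipped.
std::vector<std::string> parseDshowDevices(const std::string& ffmpegLog) {
    std::vector<std::string> names;
    std::istringstream in(ffmpegLog);
    std::string line;
    while (std::getline(in, line)) {
        if (line.find("Alternative name") != std::string::npos) continue;
        std::size_t open = line.find('"');
        if (open == std::string::npos) continue;
        std::size_t close = line.find('"', open + 1);
        if (close == std::string::npos) continue;
        names.push_back(line.substr(open + 1, close - open - 1));
    }
    return names;
}
```

The list contains both video and audio devices, so you still have to choose the camera entry yourself, for example "Integrated Camera" on my machine.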

Stream from FFMPEG to VLC first

This example streams video from your web camera to the VLC player. The FFmpeg command uses the integrated web camera as the input source (-i). Choose the video bit rate according to the codec and resolution; here -b:v 2014k sets the video bit rate to 2014 kbit/s. The -vcodec libx264 option selects the H.264 codec, and -preset ultrafast -tune zerolatency should help keep the latency low. The -r 10 option sets the frame rate to 10 fps and -s 640x480 the resolution. The -ab and -ar options set the audio bit rate and the audio sampling rate; since only a video device is captured here, they have no effect. The full command is as follows.

C:\ffmpeg\bin>ffmpeg -f dshow -i video="Integrated Camera" -preset ultrafast -tune zerolatency -vcodec libx264 -r 10 -b:v 2014k -s 640x480 -ab 32k -ar 44100 -f mpegts -flush_packets 0 udp://192.168.0.116:5120?pkt_size=1316
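The options explained above can be kept in one place and turned into the command line programmatically, for example when FFmpeg is launched from a helper tool. A minimal sketch: StreamSettings and buildFfmpegCommand are hypothetical names, the default values are the ones from the command above, and the audio options -ab and -ar are left out because no audio device is captured.

```cpp
#include <string>

// Hypothetical settings struct mirroring the options of the command above.
struct StreamSettings {
    std::string device = "Integrated Camera";  // name from ffmpeg -list_devices
    int fps = 10;                              // -r
    std::string videoBitrate = "2014k";        // -b:v
    std::string size = "640x480";              // -s
    std::string host = "192.168.0.116";        // receiver address
    int port = 5120;                           // receiver UDP port
    int packetSize = 1316;                     // 7 MPEG-TS packets of 188 bytes
};

// Builds the FFmpeg command line that streams the camera as H.264 in MPEG-TS over UDP.
std::string buildFfmpegCommand(const StreamSettings& s) {
    return "ffmpeg -f dshow -i video=\"" + s.device +
           "\" -preset ultrafast -tune zerolatency -vcodec libx264 -r " + std::to_string(s.fps) +
           " -b:v " + s.videoBitrate +
           " -s " + s.size +
           " -f mpegts -flush_packets 0 udp://" + s.host + ":" + std::to_string(s.port) +
           "?pkt_size=" + std::to_string(s.packetSize);
}
```

Changing one field, for example the port, then changes every place the command is used, instead of editing a long command line by hand.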

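In VLC, the task is much easier. Open Media > Open Network Stream and enter udp://@192.168.0.116:5120. Make sure to include the @: it tells VLC to listen on that local address and port instead of connecting to a remote source. The result is the web camera video displayed in the VLC player.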
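The VLC address differs from the FFmpeg target only by the @ and by the FFmpeg-specific query options such as pkt_size. A small sketch of that conversion; toVlcListenUrl is a hypothetical helper and it only handles udp:// URLs.

```cpp
#include <string>

// Hypothetical helper: converts the FFmpeg sender URL
// (e.g. "udp://192.168.0.116:5120?pkt_size=1316") into the URL typed into VLC
// (e.g. "udp://@192.168.0.116:5120"). Returns an empty string for non-UDP URLs.
std::string toVlcListenUrl(const std::string& senderUrl) {
    const std::string scheme = "udp://";
    if (senderUrl.compare(0, scheme.size(), scheme) != 0) return "";
    std::string hostPort = senderUrl.substr(scheme.size());
    // Drop FFmpeg query options such as ?pkt_size=1316.
    std::size_t query = hostPort.find('?');
    if (query != std::string::npos) hostPort.erase(query);
    return scheme + "@" + hostPort;
}
```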

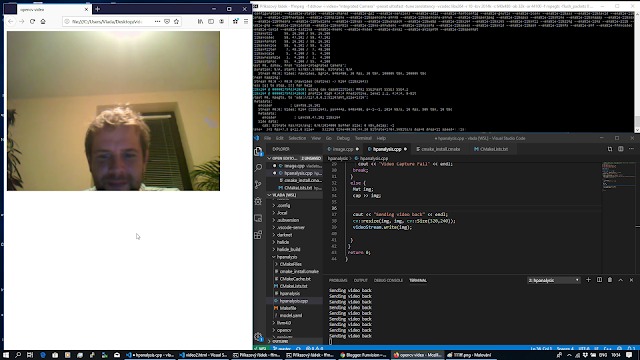

Stream from FFMPEG Web camera to Opencv under WSL

Now connect FFmpeg and stream video into the WSL OpenCV program. First, start web camera streaming as in the previous VLC example.

ffmpeg -list_devices true -f dshow -i dummy

C:\ffmpeg\bin>ffmpeg -f dshow -i video="Integrated Camera" -preset ultrafast -tune zerolatency -vcodec libx264 -r 10 -b:v 2014k -s 640x480 -ab 32k -ar 44100 -f mpegts -flush_packets 0 udp://192.168.0.116:5120?pkt_size=1316

Check the newer article about VideoWriter: https://funvision.blogspot.com/2021/12/compile-opencv-4-with-gstreamer-on.html

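#include <iostream>
#include <opencv2/opencv.hpp>

using namespace cv;
using namespace std;

int main(int argc, const char** argv)
{
    // Print the build information to confirm that FFMPEG and GStreamer are enabled
    std::cout << cv::getBuildInformation() << std::endl;

    // Receive the H.264/MPEG-TS stream that FFmpeg sends from Windows over UDP
    VideoCapture cap("udp://192.168.0.116:5120", CAP_FFMPEG);

    // Send the processed frames back: scale to 320x240, encode with Theora, mux into Ogg
    // and serve over TCP on port 8080. Each literal ends with a space so that the
    // concatenated pipeline keeps its tokens separated.
    VideoWriter videoStream("appsrc ! videoconvert ! videoscale ! "
                            "video/x-raw,width=320,height=240 ! theoraenc ! "
                            "oggmux ! tcpserversink host=192.168.0.116 port=8080 "
                            "recover-policy=keyframe sync-method=latest-keyframe sync=true",
                            CAP_GSTREAMER, 0, 5, Size(320, 240), true);

    if (!cap.isOpened()) {
        cout << "Video Capture Fail" << endl;
        return 1;
    }
    if (!videoStream.isOpened()) {
        cout << "Video Writer Fail" << endl;
        return 1;
    }

    Mat img;
    for (;;) {
        // Read two frames and keep the second: this halves the 10 fps input
        // (-r 10 in the FFmpeg command), roughly matching the 5 fps writer
        cap.grab();
        cap >> img;
        if (img.empty()) {
            cout << "Empty frame, stopping" << endl;
            break;
        }
        cout << "Sending video back" << endl;
        resize(img, img, Size(320, 240));
        videoStream.write(img);
    }
    return 0;
}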
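When a GStreamer pipeline is split over several adjacent string literals, C++ joins them with no separator, so a missing trailing space silently merges two tokens (for example, port=8080 and recover-policy=keyframe become port=8080recover-policy=keyframe). A minimal sketch of a hypothetical helper, joinPipeline, that inserts the ! separators itself and rebuilds the Theora/Ogg/TCP pipeline used by the VideoWriter in this program. Only the string is built here; no GStreamer or OpenCV call is made.

```cpp
#include <string>
#include <vector>

// Hypothetical helper: joins GStreamer elements with " ! " so that no separator
// can be forgotten when the pipeline is written over several lines.
std::string joinPipeline(const std::vector<std::string>& elements) {
    std::string out;
    for (std::size_t i = 0; i < elements.size(); ++i) {
        if (i > 0) out += " ! ";
        out += elements[i];
    }
    return out;
}

// Hypothetical helper: the Theora/Ogg/TCP pipeline from the program, built from parts.
std::string theoraTcpPipeline(const std::string& host, int port, int width, int height) {
    return joinPipeline({
        "appsrc",
        "videoconvert",
        "videoscale",
        "video/x-raw,width=" + std::to_string(width) + ",height=" + std::to_string(height),
        "theoraenc",
        "oggmux",
        "tcpserversink host=" + host + " port=" + std::to_string(port) +
            " recover-policy=keyframe sync-method=latest-keyframe sync=true"});
}
```

The result of theoraTcpPipeline("192.168.0.116", 8080, 320, 240) can be passed as the first argument of VideoWriter together with CAP_GSTREAMER.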

Once your program is compiled and FFmpeg is sending video from Windows, just run the code above in Linux. When a video frame arrives from Windows, the "Sending video back" message is displayed. Do not capture the result with VLC! I had some problems capturing the video stream from Linux back in Windows with VLC. Use the simple code below instead.

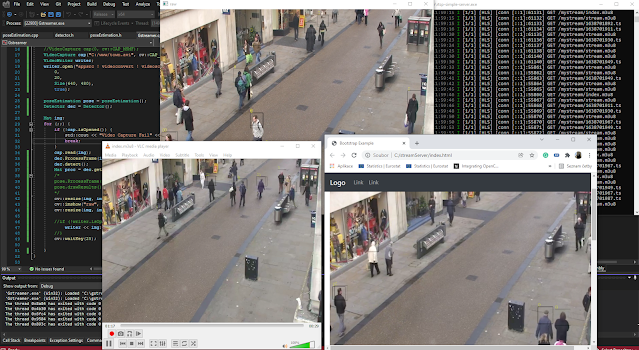

Receive and display the OpenCV video stream on the web

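The website is just a simple HTML5 video player whose source is my video stream at 192.168.0.116:8080, the tcpserversink address used by the program above. Note that browsers do not support rtsp:// sources in the HTML5 video element, so the rtsp:// URL below may need to be adjusted for your browser and setup.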

<!DOCTYPE html>

<html>

<head>

<meta http-equiv="content-type" content="text/html; charset=utf-8">

<title>opencv video</title>

</head>

<body>

<video id="video1" width=640 height=480 controls>

<source src="rtsp://192.168.0.116:8080">

</video>

<script>

var myVideo = document.getElementById("video1");

function playPause() {

if (myVideo.paused)

myVideo.play();

else

myVideo.pause();

}

function makeBig() {

myVideo.width = 560;

}

function makeSmall() {

myVideo.width = 320;

}

function makeNormal() {

myVideo.width = 420;

}

</script>

</body>

</html>

Thank you! In this tutorial, we covered the following topics:

- Capturing a video stream in OpenCV

- Sending video from Windows

- Streaming the OpenCV result to a web browser

- Reaching a web camera in WSL
